Editing is like flossing. You know you need to do it, but it’s a tedious, detail-obsessed process that makes you question every choice you’ve ever made. Was that scene good enough? Is it “Underarmour” or “Under Armour?” And, perhaps crucially, how many times can one character gaze during a paragraph? Can’t they just look, for God’s sake?

Intro

For those of us who want a little help cleaning up our work without draining the joy out of it, there’s good news: we’ve got technology! We don’t want something to floss for us, but to make sure we don’t end up with spinach in our plot holes. Tools like Grammarly and ProWritingAid are great for catching the basics, but if you want something smarter, faster, and bordering on sorcery, let me introduce you to Large Language Models—or LLMs. They don’t just clean up typos; they help refine flow, tone, and clarity while retaining your individual voice.

LLMs like Claude and ChatGPT can handle way more than just fixing typos. They can help refine your sentences and spot clunky phrasing while preserving your tone. But they’re not perfect, and they’re definitely not for every situation. In this video, I’ll break down when and how to use LLMs, where they shine, and where they fall flat.

Start with Why

So, where do these tools fit into your editing journey? If you just want to know which tool to use, the TL;DR is:

- Claude is what you want when precision and minimal usage are key.

- ChatGPT is best when flexibility and dialogue are essential.

- At no time are ‘free’ locally run models good as copyeditors.

There are four reasons you might want help editing your work:

- You need a basic English spellchecker and grammar observer that can pick up in-context errors. Typically, this is where ProWritingAid and Grammarly play.

- You need a copyeditor to look at sentence structure/efficiency, echoes, and factual errors (is it Underarmour or Under Armour?).

- You need a style editor to help identify where scenes are lacking nuance (the ‘vibe’ of an action scene, or what a character sees, smells, and hears on a downtown city street).

- Maybe your writing is just shit, and you need advice on how to un-shit it.

Longer version

There are various LLMs (Large Language Models) on the market. Some you can run yourself, and others are hosted in the mighty cloud, most often with a fee.

- The ones you can run yourself are pretty useless at anything other than content generation. If you’re a writer who doesn’t know how to write, you might be in the wrong field, but hey—you can use local tools. Personally, I’ve had nothing but pain and aggravation trying to use local LLMs for copyediting. They want to change the prose too much and won’t honour the prompt.

- Of the cloud-hosted ones, the two good choices I’ve found are Claude and ChatGPT. You can’t meaningfully use either unless you pay, so be mindful of that, but both give you a free option that will let you work out which one’s best for you. It’s more appetiser than the main meal, so prepare your credit card.

- Claude excels at following the prompt; it’s best at this and produces the cleanest output. It has more stringent daily limits, so you can’t use it as much.

- ChatGPT balances managing clumsy prose and adhering to prompts, which is why I’ve chosen it. However, you need to use the new o1 models to get this, so you won’t see the benefit if you are just on the free plan. In my experience, it’s better at style enhancement and identification, but can sometimes hallucinate.

What is Copyediting?

Copyediting is not copywriting. Writing copy is something quite different, and many people are using LLMs to do just that. It’s why so many websites appear to be copy-pasted versions of each other, and why the Internet is almost 100% useless now.

Copyediting is the pass of your work where we check spelling, grammar, basic facts like brand names, and sentence structure and flow (ideally to remove overly clumsy prose and echoes). An echo is the same word, or a same-sounding word, used twice close together, which jars the reader.
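To make the idea of an echo concrete, here is a minimal sketch of a naive echo finder. It only catches exact repeats of longer words within a small window, so it is a rough illustration of the concept and not how an LLM or any editing tool actually works. The function name, the `window` and `min_length` defaults, and the sample sentence are all made up for this example.

```python
import re


def find_echoes(text, window=12, min_length=5):
    """Return (word, first_position, repeat_position) for each word that
    repeats within `window` words of its previous use.

    Short words (under `min_length` letters) are ignored so that
    'the', 'and', and friends don't drown out the real echoes.
    """
    words = [w.lower() for w in re.findall(r"[A-Za-z'’]+", text)]
    last_seen = {}  # word -> position of its most recent use
    echoes = []
    for position, word in enumerate(words):
        if len(word) < min_length:
            continue
        previous = last_seen.get(word)
        if previous is not None and position - previous <= window:
            echoes.append((word, previous, position))
        last_seen[word] = position
    return echoes


sample = "Richard gazed at the harbour. The harbour lights gazed back at him."
print(find_echoes(sample))
# prints [('harbour', 4, 6), ('gazed', 1, 8)]
```

A check like this has no idea when a repeat is deliberate, and it misses same-sounding words entirely, which is part of why a context-aware pass is still worth having.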

Bearing in mind that the fourth category—un-shitting your work—is not something I’m interested in, I’ll just ignore that and the local model category, focusing on the cloud services.

So, what we want is an LLM that looks at what we’ve written and corrects it while understanding context, without rewriting our words or offering ‘helpful’ advice on making it better. They largely suck at great prose, so unless you’re quite new on your writing journey, they’re unlikely to offer much value as a stylistic editor or copywriter.

The thing about LLMs is they learn from the internet—aka the world’s largest collection of mediocre writing. They’re trained on a base average of human output, which means if you’re already a good writer, these tools might sometimes feel like asking a robot to critique Shakespeare. ‘Too many metaphors, Bill. Consider simplifying.’ But even the best of us have off days, and that’s where LLMs can catch rogue commas or accidental echoes before your editor throws something at you.

Seriously, Richard. Stop using “gaze.” It’s creepy.

The Prompt

There’s a graphic I remember an old boss sharing with me, which I loved.

We want to engineer a response from the machines that doesn’t edit our carefully chosen words. They really want to do this and deeply desire it! So, we need to stop that. Let me share the prompt I’ve built to do this, and then I’ll go over why it works so you can change it to suit your needs.

Please proofread and edit the text, focusing on correcting spelling, grammar, echoes, and ensuring smooth flow. Do not make any stylistic changes or suggestions, including altering profanity, expletives, or offensive language. Please refrain from changing the original tone, voice, or structure of the text. If you see clumsy prose, suggest an alternative, but separate this out from your other suggestions.

This is UK English. It is a work of fiction. The tone is informal (casual and conversational). Provide your responses in the following format and broken into manageable 50-200 word chunks:

Original text

Suggested edit

Detailed rationale for the change

Once you’ve finished your editing pass, finish with a summary of the text, including areas where you’re uncertain of what’s happening and/or the scene is unclear.

Here is the text to edit:

- Please proofread: this section is self-explanatory. We want it to treat this as the most important facet of our editing.

- We’re going to be super specific about not changing anything. Most LLMs are put into the world by hyper-conservative megacorporations, and they will deeply want to change all of your instances of the word ‘fuck’ into something else. If you’ve carefully planted a field of fucks, you don’t want the LLM to make that barren.

- The tone is important. They understand tone pretty well, but if you forgo this line, they will often tell you pieces of your prose are incorrect. This is vital if you have characters that think or speak aloud; if you omit this, it will change all the prose into riveting business case speak.

- We want them to identify clumsy prose, but clearly separate it from spelling and grammar. Spelling can be objectively incorrect, but clumsy prose can be subjectively valuable.

- We set the writing language. If you’re not speaking the King’s English, you’ll want to set this to your regional dialect.

- We identify this as a work of fiction because otherwise the LLMs get all wrapped around the axle on content.

- Similarly, we identify the tone, because the LLMs know about style guides and will favour formal writing over creative text. We’ve already told it not to change our tone, but we also want a reference point so any suggestions have merit.

- Now, the money shot. We want to break apart the feedback it gives us so we can analyse it, and choose which bits we want to accept. By default, they’ll just vomit a whole chapter back at you. This section tells it to separate the feedback into logical chunks a human can parse, and then shows the original side-by-side with the suggestion. We also want it to tell us why it made the change, so you can understand whether accepting the suggestion is correct.

- Finally, we want to get it to provide a summary. This lets me see if I stuck the landing on the chapter’s intent by whether the LLM understands it. If your chapter sucks, it will tell you here whether it could benefit from clarity enhancements.

- Then we paste the entire chapter in underneath. We only do a chapter at a time, as ChatGPT sometimes hallucinates with longer blocks, and Claude churns through a lot more usage credits if you have more material.
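If you end up tweaking the dialect, tone, or chunk size regularly, the prompt above can be kept as a template and filled in per chapter. This is a minimal sketch and not part of my actual workflow (I paste into the chat window by hand); `build_prompt` and its parameter names are hypothetical, and the defaults simply mirror the prompt as written.

```python
PROMPT_TEMPLATE = """Please proofread and edit the text, focusing on correcting spelling, grammar, echoes, and ensuring smooth flow. Do not make any stylistic changes or suggestions, including altering profanity, expletives, or offensive language. Please refrain from changing the original tone, voice, or structure of the text. If you see clumsy prose, suggest an alternative, but separate this out from your other suggestions.

This is {dialect}. It is a work of {genre}. The tone is {tone}. Provide your responses in the following format and broken into manageable {min_words}-{max_words} word chunks:

Original text

Suggested edit

Detailed rationale for the change

Once you’ve finished your editing pass, finish with a summary of the text, including areas where you’re uncertain of what’s happening and/or the scene is unclear.

Here is the text to edit:

{chapter}"""


def build_prompt(
    chapter,
    dialect="UK English",
    genre="fiction",
    tone="informal (casual and conversational)",
    min_words=50,
    max_words=200,
):
    """Fill the copyediting prompt with one chapter and its settings."""
    return PROMPT_TEMPLATE.format(
        chapter=chapter.strip(),
        dialect=dialect,
        genre=genre,
        tone=tone,
        min_words=min_words,
        max_words=max_words,
    )


# One chapter at a time, as described above.
print(build_prompt("Richard gazed at the harbour.", dialect="US English"))
```

Keeping the fixed instructions in one place means the only things that change between runs are the chapter text and the handful of settings you chose on purpose.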

Strengths of each LLM

ChatGPT was trained in a system using humans to rate its responses, which has led to it providing the industry standard for most human-like output. It sounds like a person, and is great at generating human-sounding output, especially dialogue. It does, however, hallucinate, especially with older models and longer content. To combat this, we want to use either o1-mini or o1-preview. The o1-preview is excellent, but currently limited within the month; o1-mini is fine and doesn’t have a limit.

Claude’s been trained differently and, in my experience, is best at prompt adherence and doing the job right. I’ve yet to have it hallucinate, and the output is the cleanest and fastest to work with. It’s severely limited in how much you can use daily, even with a subscription, so I can’t rely on it—but if you edit less per day, then it’s the best choice.

In summary:

- Claude is the better copyeditor of the two, but

- ChatGPT is good enough to succeed and doesn’t have painful content limits. It’s better at dialogue suggestions, and so edges out as the one I pay for.

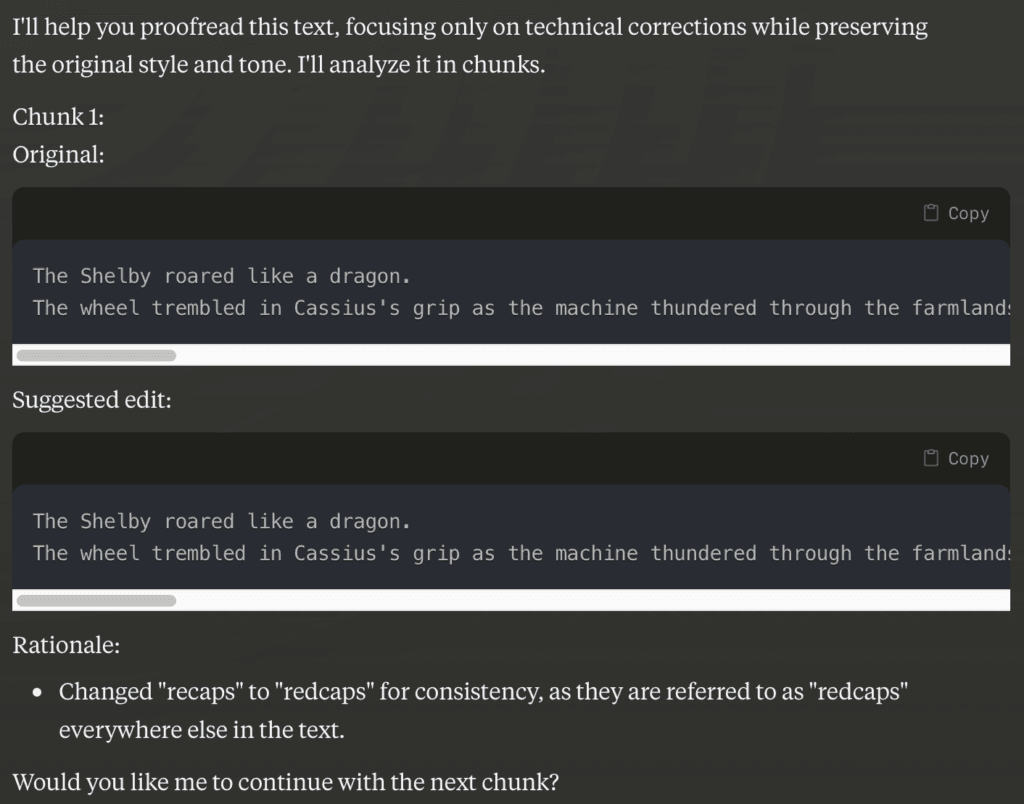

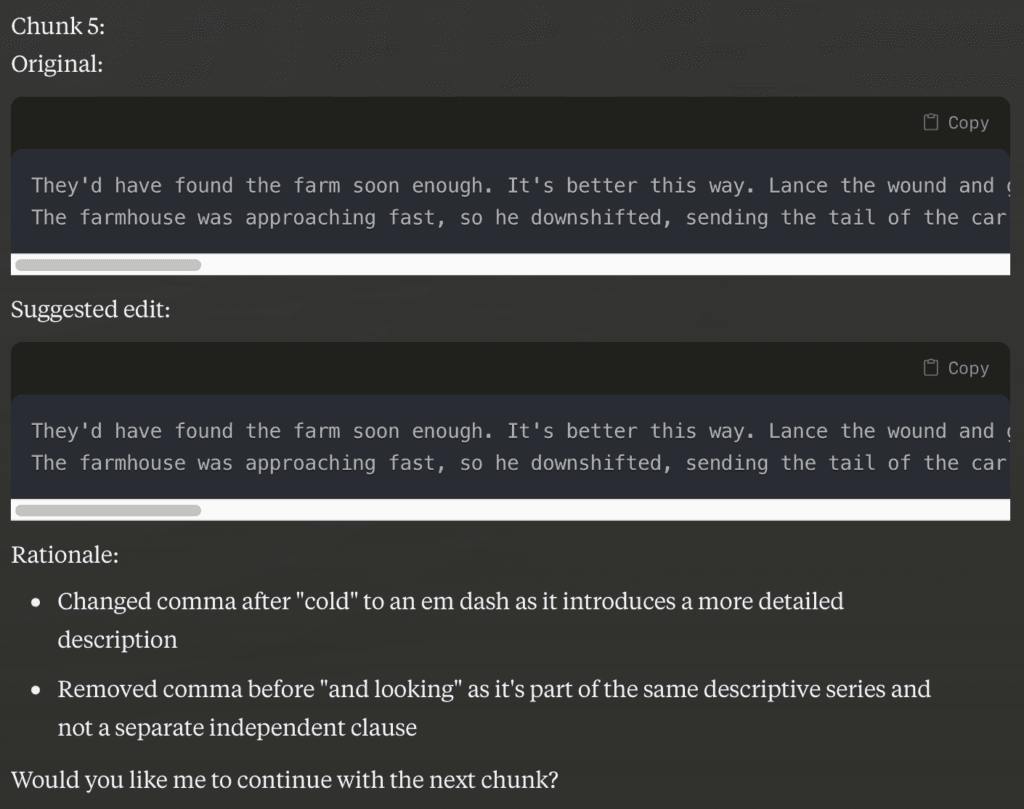

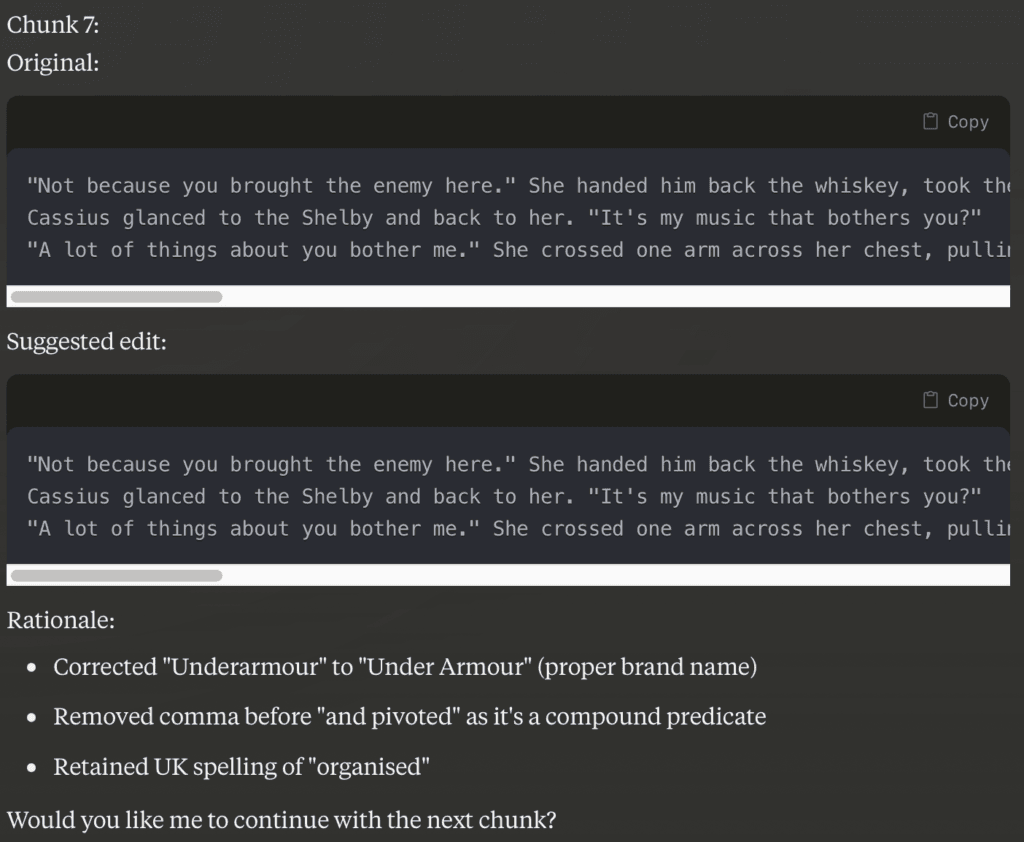

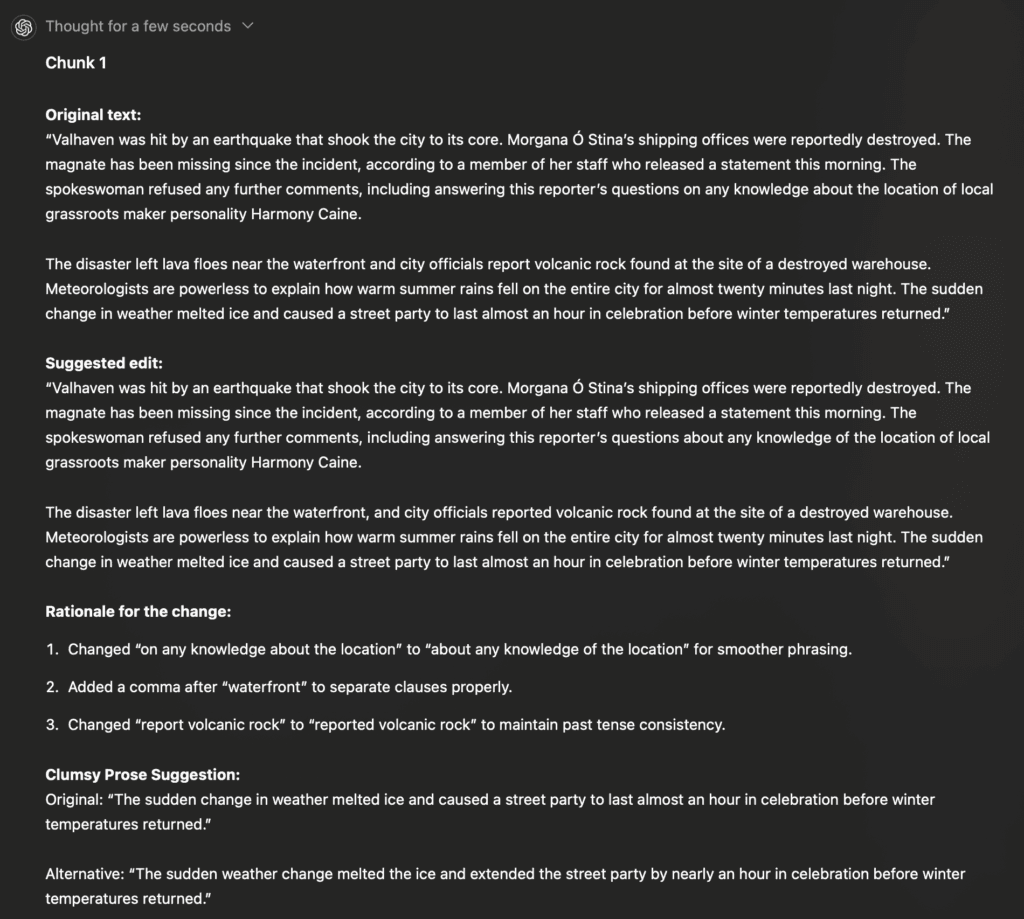

Typical output

Here’s the typical output of Claude. Honestly, I feel this is pretty peak perfection, but the limiting factor of Anthropic’s model is the cost. My chapters are 1,500–3,000 words long, and this means Claude is limited to roughly five chapters a day, even with a subscription. I need more than this when I’m editing, but if your workflow does fewer chapters a day, this might be fine.

You can see how well it understands context; it knew recaps should be redcaps despite recaps being a legitimate word. It knows when to capitalise Spartan. It’ll deal nicely with my shitty use of commas. We’ve told it to use UK English, so it understands the hyphenated rear-view is more common there. It correctly understands how to use an em dash. It knows about brand names.

The only real downside here is that it waits for you to continue before going on, so you’ll need to keep telling it to continue between chunks. Now, let’s check out ChatGPT with the o1-mini model.

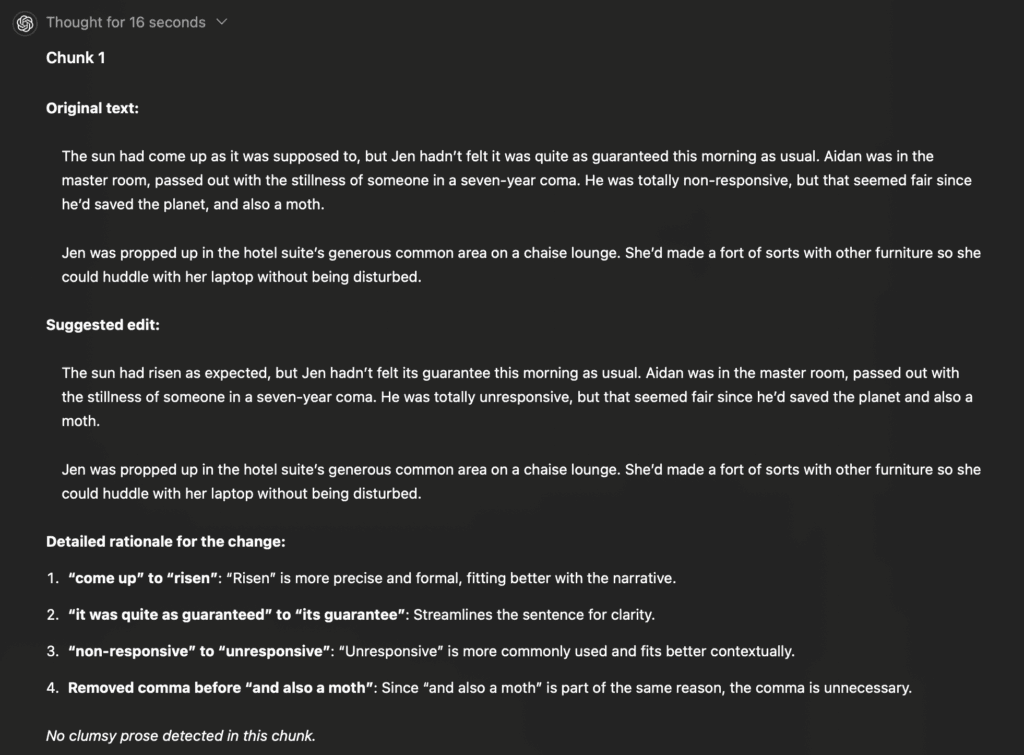

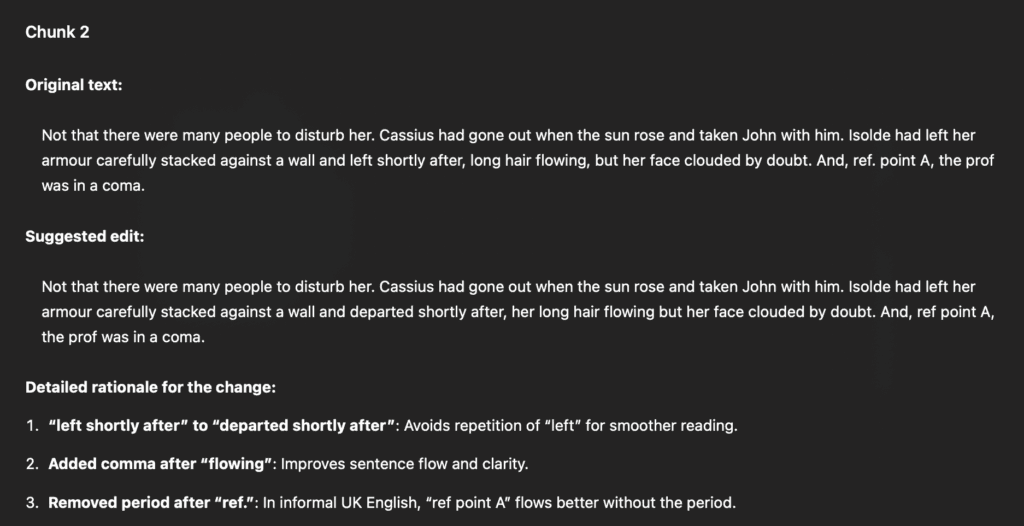

Ah yeah, here we go. It understands smoother phrasing, still deals with our comma challenges, and handles basic grammar well. We’re getting suggestions for clumsy prose, which Claude didn’t do so well at.

It’s difficult to show it by screenshot, but it also fired through the entire chapter in one go so we can just scroll through at our own pace. This is more efficient from an interaction perspective, but it’s unique to the o1 models in my experience; the older models are more like Claude’s wait-for-signal stop/go behaviour.

We can see how ChatGPT is being more ‘helpful’ with word choices. This is arguably a better copyeditor, but might annoy you more if you just want to keep your words. It helps with our hyphenation and word choice while policing our commas effectively. Arguably, it understands UK English a little better with things like “ref” and “mr.” In UK English, these don’t have a period after, and it catches them more efficiently. We’ve also got evidence of echo prevention.

It’s good at grammar and understands rules like restrictive clauses. We’ve also proved it won’t make changes for the sake of it, which older ChatGPT models do, as do literally all the local models.

Ok, great: what do they cock up?

Shit that o1-mini gets wrong:

- Sometimes stops without finishing a chapter.

- Rarely doesn’t honour requests for chunking.

- Will sometimes say it’s made a change, and it hasn’t (it just confirms the same text).

But:

- Its suggestions in “editing” mode are more appropriate.

- Its clumsy-prose suggestions aren’t great, but it’s good at identifying wonky words.

Shit that ordinary ChatGPT does:

- Lots of em dashes; it’s crazy about them.

- Word substitutions and overly helpful prose alterations.

Shit that Claude mucks up:

- It isn’t as helpful with clumsy prose.

- Its suggestions sound more robotic than creative language, which means there’s an extra mental step to get through the gate.
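One of the o1-mini failures listed above, where it claims a change but hands back the same text, is easy to check mechanically. Below is a minimal sketch that assumes you have copied the "Original text" and "Suggested edit" chunks out of the response into pairs by hand; `split_suggestions` and the sample pairs are hypothetical and nothing here talks to either service.

```python
def normalise(text):
    """Collapse runs of whitespace so line wrapping doesn't count as a change."""
    return " ".join(text.split())


def split_suggestions(pairs):
    """Separate (original, suggested) pairs into real edits and no-ops.

    A no-op is a 'suggestion' whose text is identical to the original
    once whitespace is normalised.
    """
    real_edits, no_ops = [], []
    for original, suggested in pairs:
        if normalise(original) == normalise(suggested):
            no_ops.append((original, suggested))
        else:
            real_edits.append((original, suggested))
    return real_edits, no_ops


pairs = [
    ("He looked in the rear view mirror.", "He looked in the rear-view mirror."),
    ("The redcaps were waiting.", "The redcaps  were waiting."),
]
real_edits, no_ops = split_suggestions(pairs)
print(len(real_edits), len(no_ops))
# prints 1 1
```

Anything that lands in `no_ops` can be skipped without reading the rationale, which saves a little of the time spent weighing suggestions.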

What are the risks?

Some writers might be concerned about two challenges with Claude and ChatGPT.

- The elephant in the room is the concern that LLMs are stealing authors’ works for future training. If you paste your work chapter-by-chapter into an LLM, can they steal your ideas?

- Are there privacy or copyright implications for using cloud-based services?

Alright, quick heads-up before we dive in: I’m not a lawyer—just a regular person with a general idea of how things might work. When it comes to anything legal, you should absolutely talk to someone who’s actually qualified to give you real advice. Don’t go making big business decisions based on the ramblings of some random internet stranger (a.k.a. me). This is all just my personal opinion, not legal or financial advice, so if things go sideways, that’s on you. Cool? Cool.

There’s probably a useful framing to start the conversation. Writers wanting to hone their existing work by using advanced editing tools isn’t some Orwellian scheme to turn authors into lazy button-pushers. The way I think of it is that AI’s here to assist, not replace. It’s like autocorrect for your imagination. LLMs can spot technical errors and improve clarity, but they lack the creative intuition and emotional depth of human writers. Or, they’re hammers and you’re the carpenter. You still shape the story, define the tone, and breathe life into your characters.

Now, on stealing authors’ works. Platforms like OpenAI (ChatGPT) and Anthropic (Claude) publish privacy policies and data controls that let you keep your chats out of model training, though whether that is opt-in or opt-out varies by provider and plan, so check the settings on your own account. You should decide whether to trust them based on how much you trust any other megacorporation with your data. Have you found publicly cited examples where OpenAI or Anthropic have stolen user data for training after a user opted out? Safety might be higher than you assume, but you’ll need to make your own call on this.

If you are paranoid about this, locally hosted models are the way to go, but refer above: they suck.

Now, copyright, or as I like to call it, Who Owns the Output? If an AI edits my story, is it still mine? The law’s take on this is that AI tools don’t claim ownership of your work, and I suspect this is why Microsoft and Apple are stuffing their word processors with AI editing features. You maintain full copyright over the original text and edits produced, but you should check the specifics for your local jurisdiction.

Edits generated by AI are considered tools you’ve used to enhance your work—no different from asking a friend to proofread. If you’re using the tools to do the writing, you’re on your own here as that’s pure generative work and not editing. But if you’ve already added your creative stamp to the work, any output generated by the AI is derivative, not transformative. That means it’s still your baby.

If you want some security tips for peace of mind, use pseudonyms or placeholder content if you’re concerned about leaks during drafting. Check if your tool offers encrypted sessions (e.g., OpenAI’s business tiers). And use offline LLMs for high-security needs if that’s really what you need. Ultimately, the decision to use an AI tool comes down to trust. Do your research, take small steps, and remember: your creative voice will always be the one driving your story.
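The pseudonym tip can be done with a simple find-and-replace before you paste a chapter, then reversed on the edited text. This is a minimal sketch under a few assumptions: the helper names and the name mapping are invented for illustration, the substitute names must not already appear in your text, and it does nothing about plot details, only names.

```python
import re


def swap_names(text, mapping):
    """Replace each whole-word key in `mapping` with its value.

    Longer names are replaced first so 'Kestrel Bay' is handled
    before a shorter name that might overlap with it.
    """
    for name in sorted(mapping, key=len, reverse=True):
        text = re.sub(rf"\b{re.escape(name)}\b", mapping[name], text)
    return text


def restore_names(text, mapping):
    """Undo swap_names by applying the mapping in reverse."""
    reverse = {substitute: real for real, substitute in mapping.items()}
    return swap_names(text, reverse)


# Hypothetical mapping: real names on the left, stand-ins on the right.
names = {"Richard": "Tobias", "Kestrel Bay": "Marrow Point"}

chapter = "Richard gazed across Kestrel Bay. Richard’s coffee had gone cold."
safe = swap_names(chapter, names)
print(safe)
# prints Tobias gazed across Marrow Point. Tobias’s coffee had gone cold.
print(restore_names(safe, names) == chapter)
# prints True
```

Ordinary-looking stand-in names are used here rather than tokens like NAME_1, on the assumption that an editor model is less likely to flag or "correct" something that reads like a real name.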

When NOT to use LLMs: Knowing their Limits

The default answer here is any time the AI is doing the writing instead of you, you shouldn’t be using it, but there are some broader considerations. While my goals are to improve efficiency and quality, AI tools aren’t one-size-fits-all. Sometimes they’re objectively bad.

1. Emotional Complexity: The Human Touch

LLMs can parse sentences, but they can’t feel. It’s fair to say they don’t really know what they’re saying, even when they speak with absolute confidence, much like your drunk uncle at a wedding. If you’ve crafted nuanced character arcs, layered dialogue, or scenes where subtext is the whole point, AI will 100% flatten the emotional beats.

Emotional complexity requires understanding the unsaid. How tension builds between characters, how readers interpret silences or contradictions, or even how to build that one joke you started on page one, book one, to stick the landing in the final chapter of book three. LLMs really struggle with this nuance; they can’t make stories have meaning. If you relied on AI to go over an argument between two characters, it might fix the grammar, but it probably wouldn’t understand one of them is masking heartbreak with sarcasm.

The takeaway: save AI for technical passes or flow adjustments. For emotional depth, trust your instincts or, like, a human editor. We understand what it means to wear a meat suit 24×7.

2. Long-Running Works: Maintaining Continuity

While LLMs are gaining capabilities all the time, it’s been my overwhelming experience they don’t understand past context unless you spoon-feed them. Even Google’s latest trick, NotebookLM, is terrible at sprawling, multi-book epics and stories with intricate lore.

Continuity is the lifeblood of long-form storytelling. While readers will get upset if John’s eye colour changes from blue to brown, they will get all fucked up if John’s backstory goes from being abused as a child to coming from an emotionally balanced home. LLMs will not understand the rules of your magic system. Things like this can really screw with your narrative and leave you with toothpaste you just can’t put back in the tube.

The worst part about this is that LLMs might view intricacies as errors. That one side character you mentioned in chapter 3? With the scar from a duel? An LLM might suggest removing that unnecessary detail, and then your readers will totally fail to understand why Scarface has smooth skin and his motivations have gone out the window.

The takeaway: Use LLMs for smaller, self-contained chapters. For bigger projects, keep a continuity bible and use human editors who can grasp the broader picture. I find the combination of Apple Notes and Aeon Timeline the best solution for complexity-meets-continuity.

3. Niche or Technical Material: Expertise Still Matters

Despite speaking with world-shaking authority, AI tools aren’t experts in everything. It would be fair to say they know all things knowable, but they can also hallucinate facts, misunderstand context, or simplify complex ideas into something too generic.

If your story leans on technical accuracy, like the complexities of faster-than-light travel, archaeology, or the nuances of a medieval smithy, AI has a higher than average chance of getting it wrong. The hard part is it will sound confident in its wrongness, and that’s when the wagon gets unhooked.

For example, let’s say you ask an LLM to double-check whether a trebuchet can launch a payload of 90 kilograms over 300 metres. It confidently tells you, ‘Absolutely!’ Is it right? Would your core audience of medieval historians be yelling at their e-reader?

Spoilers: I have no idea if a trebuchet can launch a 90-kilogram payload over 300 metres.

The takeaway: For technical content, consult real-world experts or trusted sources. Or, like, use Wikipedia or go to your library. AI can speed up the ideation phase of technical material, but it’s not authoritative. Let it handle grammar, not the guts of your research.

4. Early Drafts and Structural Work: The Forest, Not the Trees

Early drafts and world bibles tend to be messy by design. We want them that way: rules can stifle creativity. LLMs are far better at polishing sentences than identifying when entire paragraphs or chapters need to be moved, rewritten, or killed with fire.

Structural edits require an understanding of pacing, narrative arcs, and thematic resonance. LLMs can suggest sentence smoothing, but if you really need it to tell you to axe a scene entirely, it will miss the mark.

It might refine a section and give better word choices. But you really needed that trusted beta reader to tell you the subplot sucked and should be scrapped.

The takeaway: Focus on the big picture during early drafts. Save AI tools for later passes when you’ve locked the structure and are working on polish.

Overcorrections

If you choose not to use my prompt, or the models change, you might get output that is… overly helpful. This is not, despite what OpenAI or Anthropic might think, actually helpful.

The first thing to do if the LLM is giving you stylistic edits is: tweak your prompt. If that’s not working, try a different model. For example, OpenAI has a few you can choose from; I use o1-mini for copyediting, but in some areas like dialogue, 4o or 4o-mini are the superior choices.

The second thing to do is to understand that you are the boss. This is a machine, a tool, and even if it sounds super excited, it doesn’t know your readers or the emotional nuance you’re trying to deliver. So, you can just ignore it. I ignore it all the time! Mostly it’s good, but sometimes it veers off course. Like any advice, it’s up to you to assess the value of it.

You may find weighing the suggestions takes time. It probably will at first, but as you work with an LLM more, you’ll become adept at quickly recognising when advice is golden, or when you can fire it into the sun. The home truth here is that avoiding frustration with overcorrections helps keep your confidence in the tools. Build your confidence by better prompt engineering, using the right model, and learning to ignore what’s not useful. Or, as the late, great Bruce Lee said: accept what is useful, reject what is useless, and add what is specifically your own.

Cost vs. Benefit

These models currently run at about USD$20/month. Is that a good price?

I mean, it depends a lot on how much time you have and the quality of your human editor. I know some authors who first- or second-draft something, then hand it to their editor and blindly accept the edits. I’m just not that trusting! However, some people are and will accept the odd misfire for a faster process.

Using an LLM to copyedit your work takes time. Like any self-editing process, it will return some value. Is that worth USD$20/month? For me, yes. The good news is you don’t need to keep paying USD$20/month. You could edit a trilogy in a month, then stop paying.

There’s an angle here about whether using an AI LLM could replace a human editor. I’m unsure about that—like, I don’t think we’ve got the data yet that supports it. I think there are some clear instances where machines fail and humans don’t, but if those aren’t valuable to you, then an AI might replace humans in your workflow. Where LLMs are today, I wouldn’t advocate for it, but the risk vs. value is totally up to you.

What about ProWritingAid / Grammarly?

The great promise of these tools was to provide a real-time editor, with rephrasing techniques built right in. I read an article recently that highlighted why they’re not as good as you might think: apparently, they were birthed from academia.

They will often suggest phrases that might be technically correct, but are not how actual humans use words. If you’re writing a legal document or academic paper, sure! Great! But even when these are set to Creative mode, they still favour prose more at home in a business case.

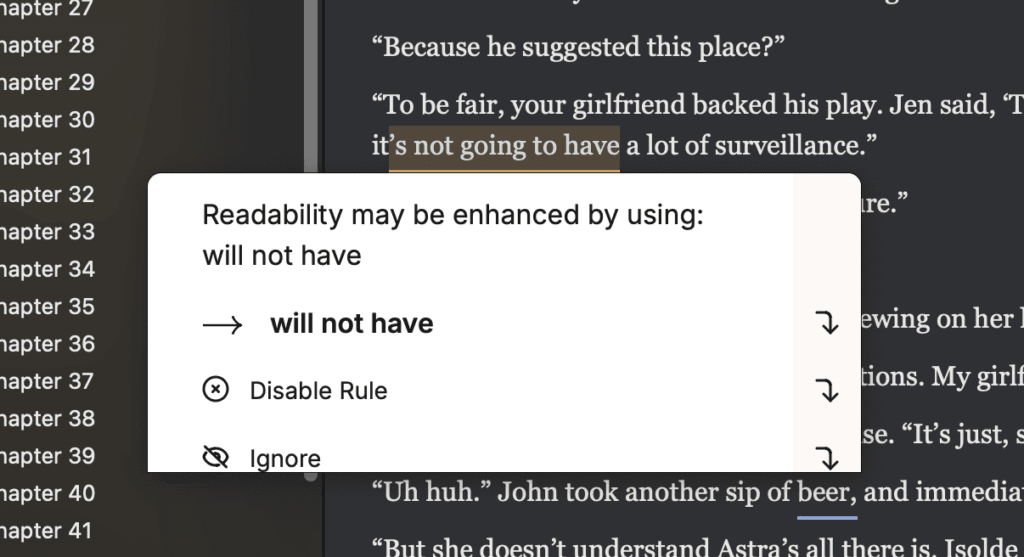

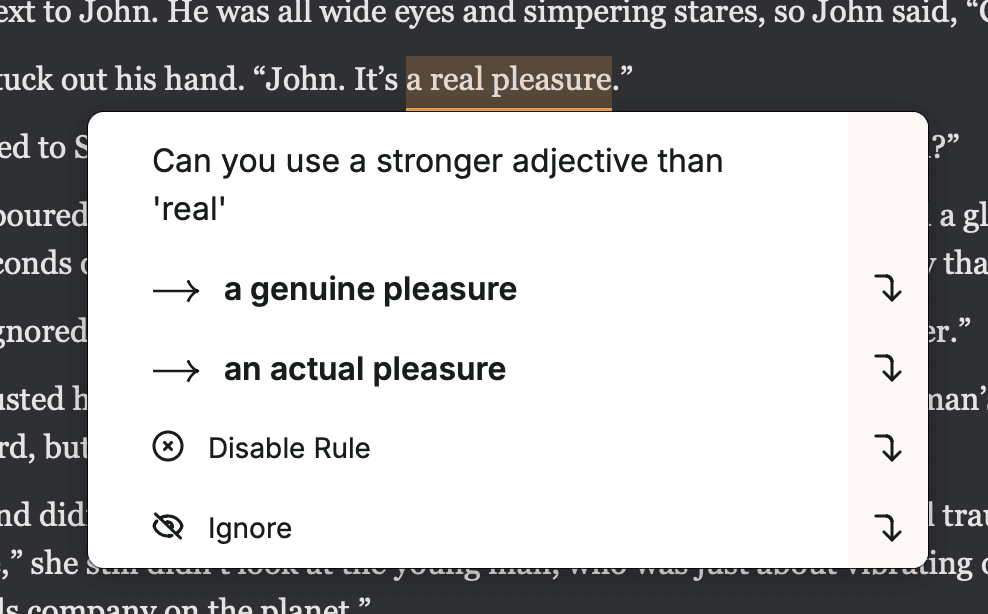

For example:

I don’t know who at ProWritingAid needs to hear this, but no human says “it will not have a lot of surveillance.” You sound like a fucking Terminator.

And do we think a human when shaking hands is going to say ‘it’s an actual pleasure?’ You sound like you’re trying to convince me.

You can see why these tools don’t work as well as LLMs; they just don’t really grok context. It’s arguable whether a real pleasure is stronger than an actual pleasure, but what’s not arguable is one way will make you sound like a T-800.

I still run ProWritingAid though, sort of dual-wielding it with LLMs for my editing passes. It’s good at catching two things: 1) passive voice and 2) consecutive sentences that start with the same word. The rephrasing system works great in this context and speeds up the workflow.

Many of you will already use PWA or Grammarly. You might wonder why you should use an LLM. The good news is, you don’t have to! It depends what you need. PWA and Grammarly operate at a sentence level, but LLMs can operate at a larger paragraph or chapter level. LLMs are effectively quick, efficient copyeditors, and PWA or Grammarly are more like line editors.

My Workflow

I run a loose three-pass system.

- I read the finished book and look for obvious issues. When I first-draft my work, it lacks things like descriptions; I’ll note characters are at a bar but not what kind of bar, or that it’s snowing but not how the ice feels on your skin. This first pass allows me to add the missing pieces. I also do a lot of deep cuts here; if a fight scene is too long, I’ll re-write it. If I’ve described a truck in too much detail, I’ll smooth that out. I’ll complete structural editing here, too—all in the same pass, because my brain works that way. Chapters out of order? I’ll fix it. Something missing? I’ll add it.

- Second pass, I go through with ProWritingAid and ChatGPT. I parse a chapter in PWA, then copy/paste that into ChatGPT with my prompt. I will analyse the outputs and refactor them. Despite sounding a lot simpler, this takes me more time than the first pass. I want to be sure that the content is as smooth and near-finished as possible.

- The humans step in. I bring in human editors and beta readers to review the near-final work. I don’t expect a lot of structural feedback, but I’m not averse to it. While it’s rare, I’ve tonally changed characters in this phase. If significant changes are needed, I’ll rework those chapters and then feed them back to step 2.

So, what?

Ultimately, whether you want to use these tools is up to you. I think you can see the new Claude and ChatGPT models do a great job of copyediting while preserving tone and context, but you need to use a subscription to get this tier of service.

My hot take is that this is a really good mechanism to streamline your process and potentially get more value from a real-life human editor. The fewer rookie mistakes they need to catch, the higher value their suggestions will be. Since LLMs only match mid-level human capabilities, deleting the mid-tier errors allows a human copyeditor to really shine up your work.

Editing doesn’t have to be torture. Tools like Claude and ChatGPT won’t replace your voice, but they can help sharpen it. Whether you’re refining a bestseller or just making sure your characters stop gazing so much, give these tools a try.

Got a favourite editing trick or a beef with LLMs? Let’s talk—drop it in the comments. And hey, don’t forget to floss your drafts.
